etudes package

Subpackages

Submodules

etudes.cli module

Console script for etudes.

etudes.decorators module

etudes.gaussian_process module

Main module.

class etudes.gaussian_process.GaussianProcessLayer(units, kernel_provider, num_inducing_points=64, mean_fn=None, jitter=1e-06, **kwargs)

Bases: tensorflow.python.keras.engine.base_layer.Layer

Attributes

- activity_regularizer: Optional regularizer function for the output of this layer.
- dtype: Dtype used by the weights of the layer, set in the constructor.
- dynamic: Whether the layer is dynamic (eager-only); set in the constructor.
- inbound_nodes: Deprecated, do NOT use! Only for compatibility with external Keras.
- input: Retrieves the input tensor(s) of a layer.
- input_mask: Retrieves the input mask tensor(s) of a layer.
- input_shape: Retrieves the input shape(s) of a layer.
- input_spec: InputSpec instance(s) describing the input format for this layer.
- losses: List of losses added using the add_loss() API.
- metrics: List of metrics added using the add_metric() API.
- name: Name of the layer (string), set in the constructor.
- name_scope: Returns a tf.name_scope instance for this class.
- non_trainable_variables
- non_trainable_weights: List of all non-trainable weights tracked by this layer.
- outbound_nodes: Deprecated, do NOT use! Only for compatibility with external Keras.
- output: Retrieves the output tensor(s) of a layer.
- output_mask: Retrieves the output mask tensor(s) of a layer.
- output_shape: Retrieves the output shape(s) of a layer.
- stateful
- submodules: Sequence of all sub-modules.
- supports_masking: Whether this layer supports computing a mask using compute_mask.
- trainable
- trainable_variables: Sequence of trainable variables owned by this module and its submodules.
- trainable_weights: List of all trainable weights tracked by this layer.
- updates: DEPRECATED FUNCTION
- variables: Returns the list of all layer variables/weights.
- weights: Returns the list of all layer variables/weights.

Methods

- __call__(*args, **kwargs): Wraps call, applying pre- and post-processing steps.
- add_loss(losses, **kwargs): Add loss tensor(s), potentially dependent on layer inputs.
- add_metric(value[, name]): Adds metric tensor to the layer.
- add_update(updates[, inputs]): Add update op(s), potentially dependent on layer inputs.
- add_variable(*args, **kwargs): Deprecated, do NOT use! Alias for add_weight.
- add_weight([name, shape, dtype, …]): Adds a new variable to the layer.
- apply(inputs, *args, **kwargs): Deprecated, do NOT use! (deprecated)
- build(input_shape): Creates the variables of the layer (optional, for subclass implementers).
- call(x): This is where the layer’s logic lives.
- compute_mask(inputs[, mask]): Computes an output mask tensor.
- compute_output_shape(input_shape): Computes the output shape of the layer.
- compute_output_signature(input_signature): Compute the output tensor signature of the layer based on the inputs.
- count_params(): Count the total number of scalars composing the weights.
- from_config(config): Creates a layer from its config.
- get_config(): Returns the config of the layer.
- get_input_at(node_index): Retrieves the input tensor(s) of a layer at a given node.
- get_input_mask_at(node_index): Retrieves the input mask tensor(s) of a layer at a given node.
- get_input_shape_at(node_index): Retrieves the input shape(s) of a layer at a given node.
- get_losses_for(inputs): Deprecated, do NOT use! (deprecated)
- get_output_at(node_index): Retrieves the output tensor(s) of a layer at a given node.
- get_output_mask_at(node_index): Retrieves the output mask tensor(s) of a layer at a given node.
- get_output_shape_at(node_index): Retrieves the output shape(s) of a layer at a given node.
- get_updates_for(inputs): Deprecated, do NOT use! (deprecated)
- get_weights(): Returns the current weights of the layer.
- set_weights(weights): Sets the weights of the layer, from Numpy arrays.
- with_name_scope(method): Decorator to automatically enter the module name scope.

build(input_shape)

Creates the variables of the layer (optional, for subclass implementers).

Implementers of Layer or Model subclasses can override this method when they need a state-creation step between layer instantiation and layer call. It is typically used to create the weights of Layer subclasses.

Arguments:

- input_shape: Instance of TensorShape, or list of instances of TensorShape if the layer expects a list of inputs (one instance per input).
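The deferred state-creation step described for build can be illustrated with a small, framework-free sketch. ToyDense below is a hypothetical NumPy stand-in, not a Keras class and not part of etudes; it only mimics the pattern in which weights are created on the first call, once the input shape is known.

```python
import numpy as np


class ToyDense:
    """Hypothetical stand-in for a layer that creates its weights lazily."""

    def __init__(self, units):
        # Only hyperparameters are stored at instantiation; no weights yet.
        self.units = units
        self.built = False

    def build(self, input_shape):
        # The input dimension is only known once a shape is provided.
        in_dim = input_shape[-1]
        self.kernel = np.zeros((in_dim, self.units))
        self.bias = np.zeros(self.units)
        self.built = True

    def __call__(self, x):
        x = np.asarray(x, dtype=float)
        if not self.built:
            # State creation happens between instantiation and first call.
            self.build(x.shape)
        return x @ self.kernel + self.bias


layer = ToyDense(units=3)
built_before_call = layer.built
out = layer(np.ones((5, 4)))
```

Here the kernel shape (4, 3) is inferred from the first input, which is why the weights cannot be created in the constructor.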

call(x)

This is where the layer’s logic lives.

Note that the call() method in tf.keras differs slightly from the one in the Keras API. In the Keras API, masking support can be passed to layers as additional arguments, whereas tf.keras provides the compute_mask() method to support masking.

Arguments:

- inputs: Input tensor, or list/tuple of input tensors.
- **kwargs: Additional keyword arguments. Currently unused.

Returns:

A tensor or list/tuple of tensors.

compute_output_shape(input_shape)

Computes the output shape of the layer.

If the layer has not been built, this method will call build on the layer. This assumes that the layer will later be used with inputs that match the input shape provided here.

Arguments:

- input_shape: Shape tuple (tuple of integers) or list of shape tuples (one per output tensor of the layer). Shape tuples can include None for free dimensions, instead of an integer.

Returns:

An output shape tuple.

class etudes.gaussian_process.KernelWrapper(input_dim=1, kernel_cls=<class 'tensorflow_probability.python.math.psd_kernels.exponentiated_quadratic.ExponentiatedQuadratic'>, dtype=None, **kwargs)

Bases: tensorflow.python.keras.engine.base_layer.Layer

Attributes

- activity_regularizer: Optional regularizer function for the output of this layer.
- dtype: Dtype used by the weights of the layer, set in the constructor.
- dynamic: Whether the layer is dynamic (eager-only); set in the constructor.
- inbound_nodes: Deprecated, do NOT use! Only for compatibility with external Keras.
- input: Retrieves the input tensor(s) of a layer.
- input_mask: Retrieves the input mask tensor(s) of a layer.
- input_shape: Retrieves the input shape(s) of a layer.
- input_spec: InputSpec instance(s) describing the input format for this layer.
- kernel
- losses: List of losses added using the add_loss() API.
- metrics: List of metrics added using the add_metric() API.
- name: Name of the layer (string), set in the constructor.
- name_scope: Returns a tf.name_scope instance for this class.
- non_trainable_variables
- non_trainable_weights: List of all non-trainable weights tracked by this layer.
- outbound_nodes: Deprecated, do NOT use! Only for compatibility with external Keras.
- output: Retrieves the output tensor(s) of a layer.
- output_mask: Retrieves the output mask tensor(s) of a layer.
- output_shape: Retrieves the output shape(s) of a layer.
- stateful
- submodules: Sequence of all sub-modules.
- supports_masking: Whether this layer supports computing a mask using compute_mask.
- trainable
- trainable_variables: Sequence of trainable variables owned by this module and its submodules.
- trainable_weights: List of all trainable weights tracked by this layer.
- updates: DEPRECATED FUNCTION
- variables: Returns the list of all layer variables/weights.
- weights: Returns the list of all layer variables/weights.

Methods

- __call__(*args, **kwargs): Wraps call, applying pre- and post-processing steps.
- add_loss(losses, **kwargs): Add loss tensor(s), potentially dependent on layer inputs.
- add_metric(value[, name]): Adds metric tensor to the layer.
- add_update(updates[, inputs]): Add update op(s), potentially dependent on layer inputs.
- add_variable(*args, **kwargs): Deprecated, do NOT use! Alias for add_weight.
- add_weight([name, shape, dtype, …]): Adds a new variable to the layer.
- apply(inputs, *args, **kwargs): Deprecated, do NOT use! (deprecated)
- build(input_shape): Creates the variables of the layer (optional, for subclass implementers).
- call(x): This is where the layer’s logic lives.
- compute_mask(inputs[, mask]): Computes an output mask tensor.
- compute_output_shape(input_shape): Computes the output shape of the layer.
- compute_output_signature(input_signature): Compute the output tensor signature of the layer based on the inputs.
- count_params(): Count the total number of scalars composing the weights.
- from_config(config): Creates a layer from its config.
- get_config(): Returns the config of the layer.
- get_input_at(node_index): Retrieves the input tensor(s) of a layer at a given node.
- get_input_mask_at(node_index): Retrieves the input mask tensor(s) of a layer at a given node.
- get_input_shape_at(node_index): Retrieves the input shape(s) of a layer at a given node.
- get_losses_for(inputs): Deprecated, do NOT use! (deprecated)
- get_output_at(node_index): Retrieves the output tensor(s) of a layer at a given node.
- get_output_mask_at(node_index): Retrieves the output mask tensor(s) of a layer at a given node.
- get_output_shape_at(node_index): Retrieves the output shape(s) of a layer at a given node.
- get_updates_for(inputs): Deprecated, do NOT use! (deprecated)
- get_weights(): Returns the current weights of the layer.
- set_weights(weights): Sets the weights of the layer, from Numpy arrays.
- with_name_scope(method): Decorator to automatically enter the module name scope.

call(x)

This is where the layer’s logic lives.

Note that the call() method in tf.keras differs slightly from the one in the Keras API. In the Keras API, masking support can be passed to layers as additional arguments, whereas tf.keras provides the compute_mask() method to support masking.

Arguments:

- inputs: Input tensor, or list/tuple of input tensors.
- **kwargs: Additional keyword arguments. Currently unused.

Returns:

A tensor or list/tuple of tensors.

property kernel

class etudes.gaussian_process.VariationalGaussianProcessScalar(kernel_wrapper, num_inducing_points, inducing_index_points_initializer, mean_fn=None, jitter=1e-06, convert_to_tensor_fn=<function Distribution.sample>, **kwargs)

Bases: tensorflow_probability.python.layers.distribution_layer.DistributionLambda

Attributes

- activity_regularizer: Optional regularizer function for the output of this layer.
- dtype: Dtype used by the weights of the layer, set in the constructor.
- dynamic: Whether the layer is dynamic (eager-only); set in the constructor.
- inbound_nodes: Deprecated, do NOT use! Only for compatibility with external Keras.
- input: Retrieves the input tensor(s) of a layer.
- input_mask: Retrieves the input mask tensor(s) of a layer.
- input_shape: Retrieves the input shape(s) of a layer.
- input_spec: InputSpec instance(s) describing the input format for this layer.
- losses: List of losses added using the add_loss() API.
- metrics: List of metrics added using the add_metric() API.
- name: Name of the layer (string), set in the constructor.
- name_scope: Returns a tf.name_scope instance for this class.
- non_trainable_variables
- non_trainable_weights: List of all non-trainable weights tracked by this layer.
- outbound_nodes: Deprecated, do NOT use! Only for compatibility with external Keras.
- output: Retrieves the output tensor(s) of a layer.
- output_mask: Retrieves the output mask tensor(s) of a layer.
- output_shape: Retrieves the output shape(s) of a layer.
- stateful
- submodules: Sequence of all sub-modules.
- supports_masking: Whether this layer supports computing a mask using compute_mask.
- trainable
- trainable_variables: Sequence of trainable variables owned by this module and its submodules.
- trainable_weights: List of all trainable weights tracked by this layer.
- updates: DEPRECATED FUNCTION
- variables: Returns the list of all layer variables/weights.
- weights: Returns the list of all layer variables/weights.

Methods

- __call__(inputs, *args, **kwargs): Wraps call, applying pre- and post-processing steps.
- add_loss(losses, **kwargs): Add loss tensor(s), potentially dependent on layer inputs.
- add_metric(value[, name]): Adds metric tensor to the layer.
- add_update(updates[, inputs]): Add update op(s), potentially dependent on layer inputs.
- add_variable(*args, **kwargs): Deprecated, do NOT use! Alias for add_weight.
- add_weight([name, shape, dtype, …]): Adds a new variable to the layer.
- apply(inputs, *args, **kwargs): Deprecated, do NOT use! (deprecated)
- build(input_shape): Creates the variables of the layer (optional, for subclass implementers).
- call(inputs, *args, **kwargs): This is where the layer’s logic lives.
- compute_mask(inputs[, mask]): Computes an output mask tensor.
- compute_output_signature(input_signature): Compute the output tensor signature of the layer based on the inputs.
- count_params(): Count the total number of scalars composing the weights.
- from_config(config[, custom_objects]): Creates a layer from its config.
- get_config(): Returns the config of this layer.
- get_input_at(node_index): Retrieves the input tensor(s) of a layer at a given node.
- get_input_mask_at(node_index): Retrieves the input mask tensor(s) of a layer at a given node.
- get_input_shape_at(node_index): Retrieves the input shape(s) of a layer at a given node.
- get_losses_for(inputs): Deprecated, do NOT use! (deprecated)
- get_output_at(node_index): Retrieves the output tensor(s) of a layer at a given node.
- get_output_mask_at(node_index): Retrieves the output mask tensor(s) of a layer at a given node.
- get_output_shape_at(node_index): Retrieves the output shape(s) of a layer at a given node.
- get_updates_for(inputs): Deprecated, do NOT use! (deprecated)
- get_weights(): Returns the current weights of the layer.
- set_weights(weights): Sets the weights of the layer, from Numpy arrays.
- with_name_scope(method): Decorator to automatically enter the module name scope.
- compute_output_shape
- new

build(input_shape)

Creates the variables of the layer (optional, for subclass implementers).

Implementers of Layer or Model subclasses can override this method when they need a state-creation step between layer instantiation and layer call. It is typically used to create the weights of Layer subclasses.

Arguments:

- input_shape: Instance of TensorShape, or list of instances of TensorShape if the layer expects a list of inputs (one instance per input).

etudes.gaussian_process.dataframe_from_gp_samples(gp_samples_arr, X_q, amplitude, length_scale, n_samples)

etudes.initializers module

class etudes.initializers.KMeans(inputs, seed=None)

Bases: etudes.initializers.SubsetInitializer

Methods

- __call__(shape[, dtype]): Returns a tensor object initialized as specified by the initializer.
- from_config(config): Instantiates an initializer from a configuration dictionary.
- get_config(): Returns the configuration of the initializer as a JSON-serializable dict.
- compute_subset

class etudes.initializers.RandomSubset(inputs, seed=None)

Bases: etudes.initializers.SubsetInitializer

Methods

- __call__(shape[, dtype]): Returns a tensor object initialized as specified by the initializer.
- from_config(config): Instantiates an initializer from a configuration dictionary.
- get_config(): Returns the configuration of the initializer as a JSON-serializable dict.
- compute_subset

class etudes.initializers.SubsetInitializer(inputs, seed=None)

Bases: tensorflow.python.keras.initializers.initializers_v2.Initializer

Methods

- __call__(shape[, dtype]): Returns a tensor object initialized as specified by the initializer.
- from_config(config): Instantiates an initializer from a configuration dictionary.
- get_config(): Returns the configuration of the initializer as a JSON-serializable dict.

etudes.math module

etudes.math.divergence_gauss_hermite(p, q, quadrature_size, under_p=True, discrepancy_fn=<function kl_forward>)

Compute

$$
\begin{aligned}
D_f[p \,\|\, q] &= \mathbb{E}_{q(x)}\left[f\left(\frac{p(x)}{q(x)}\right)\right] \\
&= \mathbb{E}_{p(x)}\left[r(x)^{-1} f(r(x))\right], && r(x) = \frac{p(x)}{q(x)} \\
&= \mathbb{E}_{p(x)}\left[\exp(-\log r(x))\, g(\log r(x))\right], && g(\cdot) = f(\exp(\cdot)) \\
&= \mathbb{E}_{p(x)}\left[h(x)\right], && h(x) = \exp(-\log r(x))\, g(\log r(x))
\end{aligned}
$$

using Gauss-Hermite quadrature, assuming p(x) is Gaussian. Note that discrepancy_fn corresponds to the function g.
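The expectation under a Gaussian p(x) can be approximated as in the following minimal NumPy sketch. It is not the etudes implementation: the helper names (expectation_gauss_hermite, log_normal_pdf, g_kl) and the Gaussian parameters are assumptions made for illustration, and g_kl assumes the KL generator f(r) = r log r, so that g(log r) = exp(log r) log r.

```python
import numpy as np


def expectation_gauss_hermite(h, loc, scale, quadrature_size):
    """Approximate E[h(x)] for x ~ N(loc, scale^2) by Gauss-Hermite quadrature."""
    # Nodes and weights for integrals of the form int exp(-t^2) f(t) dt.
    t, w = np.polynomial.hermite.hermgauss(quadrature_size)
    # The change of variables x = loc + sqrt(2) * scale * t turns the
    # Gaussian expectation into that form, up to a factor 1 / sqrt(pi).
    x = loc + np.sqrt(2.0) * scale * t
    return np.sum(w * h(x)) / np.sqrt(np.pi)


def log_normal_pdf(x, loc, scale):
    """Log-density of N(loc, scale^2) evaluated at x."""
    return -0.5 * np.log(2.0 * np.pi * scale**2) - 0.5 * ((x - loc) / scale) ** 2


def g_kl(log_r):
    """Assumed discrepancy g(log r) = f(exp(log r)) with f(r) = r log r."""
    return np.exp(log_r) * log_r


# Illustrative parameters (assumptions): p = N(0, 1), q = N(1, 2^2).
p_loc, p_scale = 0.0, 1.0
q_loc, q_scale = 1.0, 2.0


def h(x):
    """Integrand h(x) = exp(-log r(x)) g(log r(x)) with r = p / q."""
    log_r = log_normal_pdf(x, p_loc, p_scale) - log_normal_pdf(x, q_loc, q_scale)
    return np.exp(-log_r) * g_kl(log_r)


kl_quadrature = expectation_gauss_hermite(h, p_loc, p_scale, quadrature_size=20)

# Closed-form KL[p || q] between two univariate Gaussians, for comparison.
kl_exact = (
    np.log(q_scale / p_scale)
    + (p_scale**2 + (p_loc - q_loc) ** 2) / (2.0 * q_scale**2)
    - 0.5
)
```

For this choice of g, h(x) reduces to log r(x), which is a quadratic polynomial when both densities are Gaussian, so the quadrature agrees with the closed form up to floating-point error.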

-

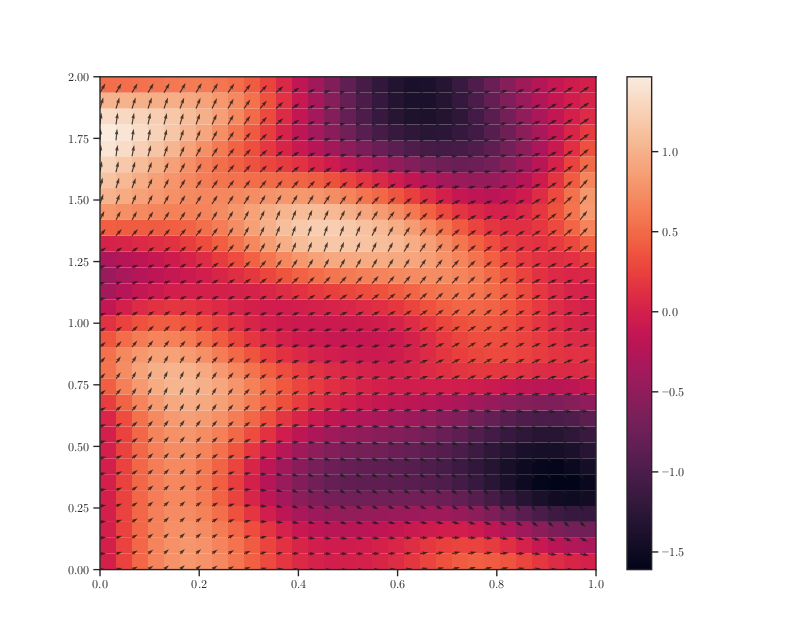

etudes.math.perlin(x, y, octaves=1, persistence=0.5, lacunarity=2.0, repeatx=1024, repeaty=1024, base=0.0)[source]¶ Vectorized light wrapper.

Examples

# from etudes.math import perlin from noise import pnoise2 step_size = 2.0 y, x = np.ogrid[0:2:32j, 0:1:32j] X, Y = np.broadcast_arrays(x, y) Z = np.vectorize(pnoise2)(x, y, octaves=2) theta = 2.0 * np.pi * Z dx = step_size * np.cos(theta) dy = step_size * np.sin(theta) fig, ax = plt.subplots(figsize=(10, 8)) contours = ax.pcolormesh(X, Y, theta) ax.quiver(x, y, x + dx, y + dy, alpha=0.8) fig.colorbar(contours, ax=ax) plt.show()

(Source code, png, hires.png, pdf)

etudes.plotting module

Plotting module.

etudes.utils module

class etudes.utils.DistributionPair(p, q)

Bases: object

Methods

- make_p_log_prob_estimator(logit_estimator): Recall log r(x) = log p(x) - log q(x). Then, we have,
- density_ratio
- divergence_monte_carlo
- from_covariate_shift_example
- kl_divergence
- logit
- make_classification_dataset
- make_covariate_shift_dataset
- make_dataset
- optimal_accuracy
- optimal_score

make_covariate_shift_dataset(class_posterior_fn, num_test, num_train, threshold=0.5, seed=None)

class etudes.utils.DistributionPairGaussian(q, p_loc=0.0, p_scale=1.0)

Bases: etudes.utils.DistributionPair

Methods

- kl_divergence_scaled_distribution(logit_estimator, quadrature_size): By definition, r(x) q(x) = p(x).
- make_p_log_prob_estimator(logit_estimator): Recall log r(x) = log p(x) - log q(x). Then, we have,
- density_ratio
- divergence_gauss_hermite
- divergence_monte_carlo
- from_covariate_shift_example
- kl_divergence
- logit
- make_classification_dataset
- make_covariate_shift_dataset
- make_dataset
- optimal_accuracy
- optimal_score

kl_divergence_scaled_distribution(logit_estimator, quadrature_size)

By definition, $r(x) q(x) = p(x)$. That is, we can think of $r(x)$ as the scaling factor needed to match $q(x)$ to $p(x)$. Let $\hat{p}(x) = \hat{r}(x) q(x)$. Then $\hat{p}(x) \approx p(x)$, and the quality of this estimate depends entirely on how well $\hat{r}(x)$ estimates $r(x)$. This function computes $\mathrm{KL}[p(x) \,\|\, \hat{p}(x)] \geq 0$ using Gauss-Hermite quadrature, assuming $p(x)$ is Gaussian. The lower the better, with equality at $p(x) = \hat{p}(x)$.
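As a rough illustration of this quantity, the sketch below evaluates the KL divergence from p to the rescaled density (the estimated ratio times q) for univariate Gaussians. It is not the etudes method: the function names, the log_ratio_estimator callable (standing in for an estimate of log r(x)), and all distribution parameters are assumptions made for the example.

```python
import numpy as np


def log_normal_pdf(x, loc, scale):
    """Log-density of N(loc, scale^2) evaluated at x."""
    return -0.5 * np.log(2.0 * np.pi * scale**2) - 0.5 * ((x - loc) / scale) ** 2


def kl_scaled_distribution(log_ratio_estimator, p_loc, p_scale, log_q, quadrature_size):
    """Approximate KL[p || p_hat] with p = N(p_loc, p_scale^2), p_hat = r_hat * q."""
    t, w = np.polynomial.hermite.hermgauss(quadrature_size)
    # Map the Gauss-Hermite nodes to samples locations under p.
    x = p_loc + np.sqrt(2.0) * p_scale * t
    log_p = log_normal_pdf(x, p_loc, p_scale)
    # log p_hat(x) = log r_hat(x) + log q(x)
    log_p_hat = log_ratio_estimator(x) + log_q(x)
    return np.sum(w * (log_p - log_p_hat)) / np.sqrt(np.pi)


# Illustrative setup (assumptions): p = N(0, 1), q = N(1, 2^2).
def log_q(x):
    return log_normal_pdf(x, 1.0, 2.0)


def exact_log_ratio(x):
    """Perfect estimator: log r(x) = log p(x) - log q(x)."""
    return log_normal_pdf(x, 0.0, 1.0) - log_q(x)


def shifted_log_ratio(x):
    """Imperfect estimator whose implied numerator is N(0.5, 1) instead of p."""
    return log_normal_pdf(x, 0.5, 1.0) - log_q(x)


kl_with_exact = kl_scaled_distribution(exact_log_ratio, 0.0, 1.0, log_q, 10)
kl_with_shifted = kl_scaled_distribution(shifted_log_ratio, 0.0, 1.0, log_q, 10)
# kl_with_exact is approximately 0; kl_with_shifted is approximately 0.125,
# the closed-form KL between N(0, 1) and N(0.5, 1).
```

The non-negativity stated above relies on the rescaled density being normalized; in this sketch both estimators imply a proper Gaussian, so the result is non-negative in each case.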

etudes.utils.make_plot_data(names, seeds, summary_dir, process_run_fn=None, extract_series_fn=None, seed_key='seed', y_key='nelbo')

etudes.utils.merge_stack_runs(series_dict, seed_key='seed', y_key='nelbo', drop_until_all_start=False)

Module contents

Top-level package for Machine Learning Etudes.